DiFaReli: Diffusion Face Relighting

VISTEC - Vidyasirimedhi Institute of Science and Technology

Rayong, Thailand

ICCV 2023

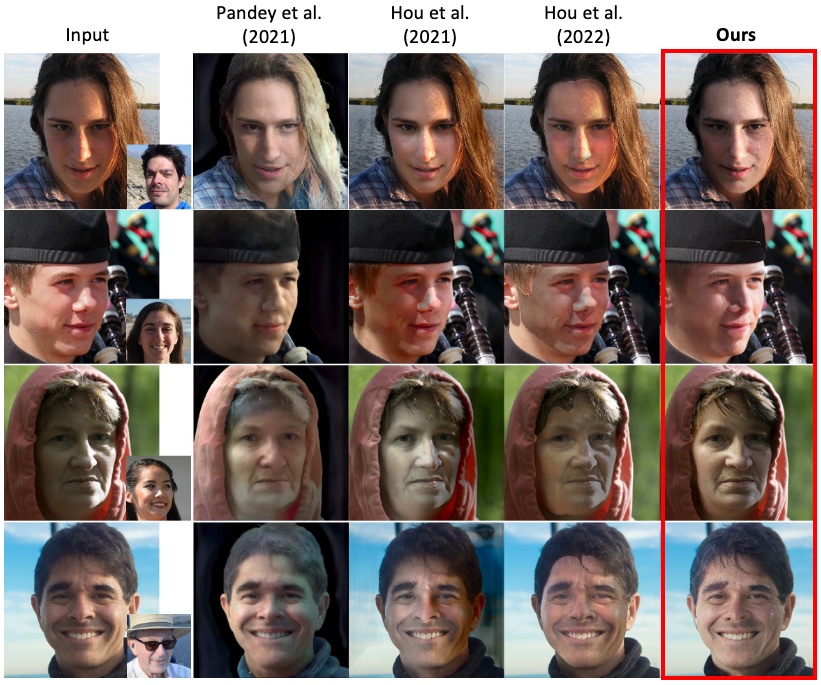

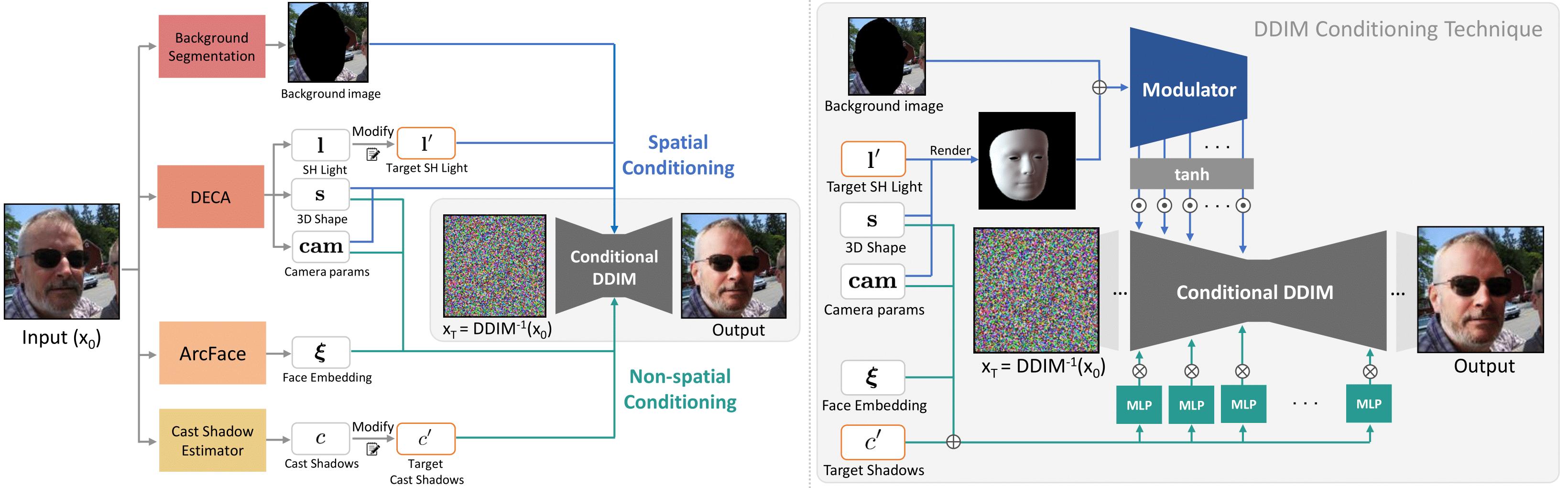

by improving shadow consistency and enabling relighting in a single network pass.

Code
